Eval-Driven Development

AI systems don’t fail because they’re dumb; they fail because we never define “good.”

I’ve written before that evals might be the most valuable layer in the AI stack. Not models, not agents: evals. They’re the thing that defines what “better” even means.

In traditional software, tests usually come last (or first, if you practice TDD): you build a thing, then write tests to make sure it works.

In AI, the eval should come first. Call it eval-driven development: EDD. The eval is the product. The north star. The compass that guides your development efforts and your choice of models, tools, and scaffolding.

That’s why what Weco just launched feels like the start of something big.

The Eval as Engine

Their system takes in one simple thing: your evaluation script. That’s it. Define the goal — speed, accuracy, cost, whatever — and it automatically explores the solution space, generating and testing hundreds of variations to find what works.

It’s eval-driven development in its purest form.
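Here is a minimal sketch of an eval-driven search loop that makes the idea concrete. It is not Weco's implementation or API. The names `optimize`, `mutate`, and `evaluate` are hypothetical, and plain random mutation stands in for the model-generated variants a real system would propose. The only thing the loop knows about the goal is the number `evaluate` returns.

```python
import random
from typing import Callable, Tuple, TypeVar

C = TypeVar("C")  # a candidate solution: a prompt, a config, a kernel, ...


def optimize(
    initial: C,
    mutate: Callable[[C, random.Random], C],  # hypothetical stand-in for a model proposing a variant
    evaluate: Callable[[C], float],           # the eval script: higher score means better
    budget: int = 200,
    seed: int = 0,
) -> Tuple[C, float]:
    """Greedy hill climbing: propose a variant of the best candidate so far,
    score it with the eval, and keep it only if the score improves."""
    rng = random.Random(seed)
    best, best_score = initial, evaluate(initial)
    for _ in range(budget):
        candidate = mutate(best, rng)
        score = evaluate(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


# Toy eval: the closer x is to 3.0, the better (the maximum score is 0).
def evaluate(x: float) -> float:
    return -(x - 3.0) ** 2


# Toy proposer: nudge the current best by a small random amount.
def mutate(x: float, rng: random.Random) -> float:
    return x + rng.gauss(0.0, 0.5)


best, score = optimize(0.0, mutate, evaluate, budget=500)
print(f"best x = {best:.3f}, score = {score:.4f}")
```

You can swap the toy `evaluate` for a real eval, such as latency, loss, or cost, without touching anything else in the loop. That is the point: the eval is the whole specification. A production system would likely search more broadly than greedy hill climbing, but the contract stays the same.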

I can’t help but think back to my CS 188 class with Pieter Abbeel at Berkeley. We built Pac-Man agents with a reward function: eat dots, avoid ghosts, don’t die. The only thing the agent “knew” was its eval. From that, it learned how to win.

Weco basically extends that idea to the messy, unpredictable world of software engineering and data science. Instead of a grid, it’s navigating your codebase and solution space. Instead of avoiding ghosts, it might be reducing your GPU bill.

They already proved the idea with their open-source system, AIDE, which hit #1 on MLE-Bench (the OpenAI-run benchmark for ML engineering). Now they’ve turned it into a commercial product that can attack any well-defined optimization problem:

Speed up this kernel by 50x.

Reduce this model’s loss by half.

Find the cheapest prompt that maintains quality.
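An eval script for the last of these might look like the following. It assumes "maintains quality" means clearing a fixed accuracy floor on a held-out test set. `PromptResult`, `QUALITY_FLOOR`, and `score` are hypothetical names, and the numbers are illustrative, not measured. Making the quality constraint a hard gate stops the optimizer from buying cost savings with quality.

```python
import math
from dataclasses import dataclass


@dataclass
class PromptResult:
    prompt: str
    accuracy: float  # fraction of a held-out test set answered correctly
    cost_usd: float  # inference cost of running that test set


QUALITY_FLOOR = 0.90  # hypothetical: "maintains quality" = at least 90% accuracy


def score(result: PromptResult) -> float:
    """Higher is better. Variants below the quality floor are rejected outright;
    among the rest, the cheaper one wins."""
    if result.accuracy < QUALITY_FLOOR:
        return -math.inf
    return -result.cost_usd


# Illustrative numbers only.
results = [
    PromptResult("long few-shot prompt", accuracy=0.94, cost_usd=12.0),
    PromptResult("short zero-shot prompt", accuracy=0.86, cost_usd=3.0),
    PromptResult("trimmed few-shot prompt", accuracy=0.92, cost_usd=5.5),
]
best = max(results, key=score)
print(best.prompt)  # trimmed few-shot prompt
```

The cheapest prompt overall loses here because it falls below the floor. The rule is simple enough to check by hand, and that is what lets an automated search run against it unattended.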

It’s not “AI building AI.” It’s AI exploring the solution space on your behalf, guided by your eval.

The Eval as Interface

What has me fired up is how people are using it.

An astrophysicist is optimizing prompts for a vision-language model.

A startup is balancing inference cost vs accuracy across model versions.

A researcher used it to make a PyTorch kernel run 7x faster.

The pattern is the same: once you have a crisp definition of “better,” Weco can run nonstop experiments to get you there.

That’s what makes evals so powerful: they’re universal interfaces for improvement. As soon as you can measure something, you can automate progress against it.
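Take speed as the metric. Below is a minimal sketch of a speed eval. It is written in pure Python rather than PyTorch so it stays self-contained, and `speedup_eval` and the prefix-sum functions are hypothetical examples. Before timing anything, it checks every output against a reference implementation. An optimizer scored on speed alone could "improve" simply by returning wrong answers quickly.

```python
import timeit
from itertools import accumulate
from typing import Callable, List, Sequence


def speedup_eval(
    candidate: Callable[[Sequence[float]], List[float]],
    reference: Callable[[Sequence[float]], List[float]],
    inputs: List[Sequence[float]],
    repeats: int = 5,
    tol: float = 1e-9,
) -> float:
    """Return reference_time / candidate_time, or 0.0 if any output is wrong."""
    # Correctness gate: a fast wrong answer scores zero.
    for x in inputs:
        got, want = candidate(x), reference(x)
        if len(got) != len(want) or any(abs(g - w) > tol for g, w in zip(got, want)):
            return 0.0

    def run(fn: Callable[[Sequence[float]], List[float]]) -> float:
        # Best-of-N wall-clock time for one pass over all inputs.
        return min(timeit.repeat(lambda: [fn(x) for x in inputs], number=1, repeat=repeats))

    return run(reference) / max(run(candidate), 1e-12)  # guard against a zero timing


def prefix_sums_slow(xs: Sequence[float]) -> List[float]:
    return [sum(xs[: i + 1]) for i in range(len(xs))]  # O(n^2) reference


def prefix_sums_fast(xs: Sequence[float]) -> List[float]:
    return list(accumulate(xs))  # O(n) candidate


def prefix_sums_wrong(xs: Sequence[float]) -> List[float]:
    return [0.0] * len(xs)  # fastest of all, and wrong


inputs = [[float(i) for i in range(300)] for _ in range(3)]
print(speedup_eval(prefix_sums_fast, prefix_sums_slow, inputs))   # timing-dependent
print(speedup_eval(prefix_sums_wrong, prefix_sums_slow, inputs))  # 0.0
```

The gate is what makes the definition of "better" crisp. Without it, the candidate that skips the work entirely would get the best score.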

Claude Code vs Weco

Everyone’s going to ask if this competes with Claude Code, Codex, Cursor, etc.

I actually think they’re complementary.

Claude Code is for active software engineering: building new systems or making UX improvements before there’s a clear metric. You’re reasoning, improvising, designing. It’s interactive. The focus is on building de novo.

Weco is for the opposite phase: when the goal is quantifiable, when you can say “make this 2x faster” or “cut cost by 30%.” Then you hand it off, and it keeps grinding overnight, over the weekend, while you sleep. The focus is on iterating on and improving what already exists.

Claude Code helps you build.

Weco helps you evolve.

Both are part of the abstraction layer emerging between humans and machines: we define goals, they explore.

The Right Harness

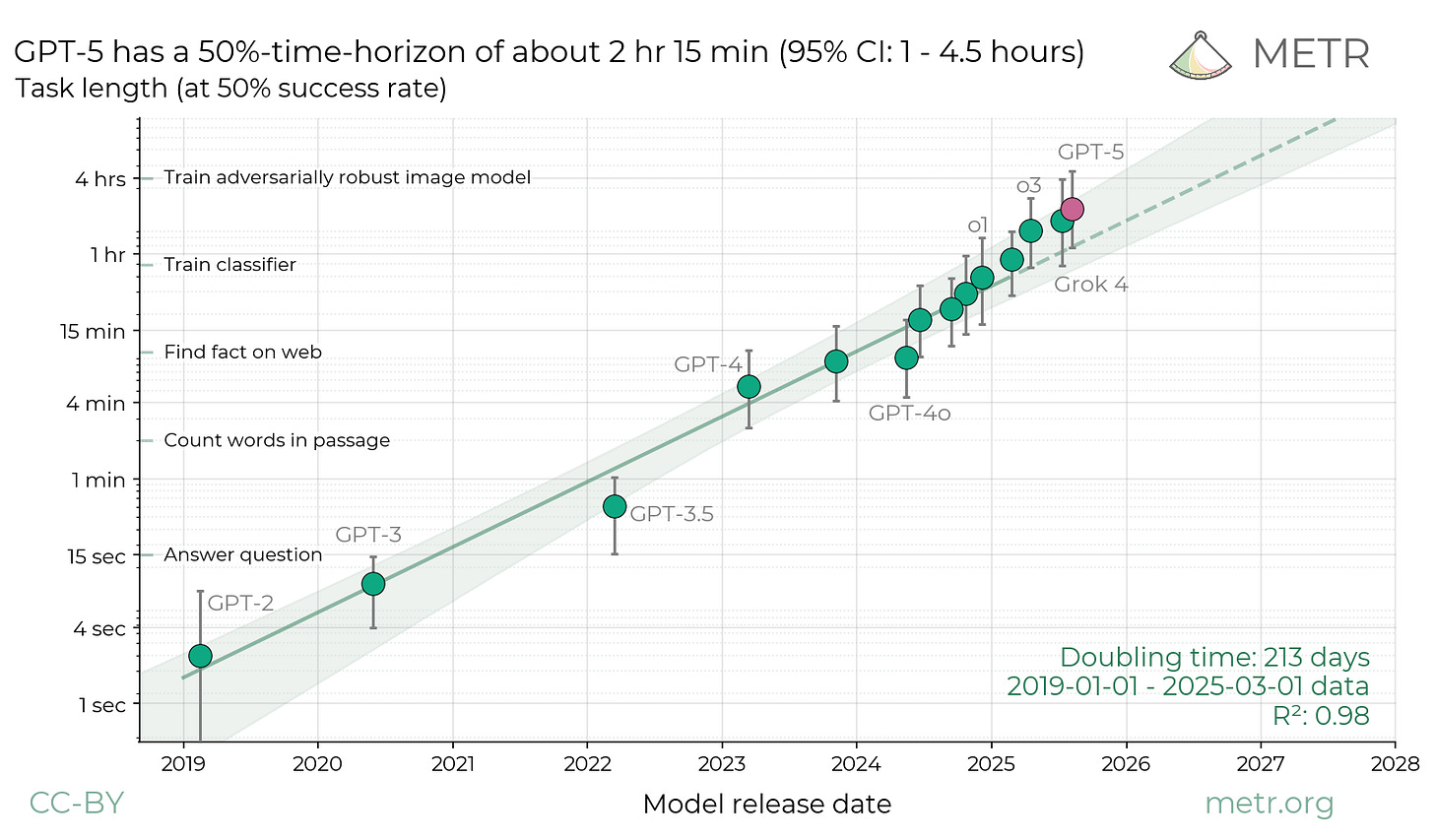

The recent METR study on GPT-5 found that it reaches a 50% success rate on software engineering tasks that take skilled humans around 2 hours and 17 minutes to complete. That’s impressive, but also a reminder of what’s being tested: the model, not the system around it.
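A quick note on the metric, sketched under an assumption. As I understand METR's approach, they fit a logistic curve to each model's success rate against the log of how long tasks take skilled humans. The "50% time horizon" is the task length where that curve crosses 50%. The code below assumes exactly that form, and `alpha` and `beta` are illustrative placeholders, not METR's fitted values.

```python
import math


def success_probability(task_minutes: float, alpha: float, beta: float) -> float:
    """Logistic model: P(success) as a function of log2(human task length)."""
    z = alpha + beta * math.log2(task_minutes)
    return 1.0 / (1.0 + math.exp(-z))


def horizon_50(alpha: float, beta: float) -> float:
    """Task length (in minutes) where predicted success is exactly 50%.

    The sigmoid equals 0.5 when z = 0, so alpha + beta * log2(t) = 0,
    which gives t = 2 ** (-alpha / beta). beta < 0: longer tasks are harder.
    """
    return 2.0 ** (-alpha / beta)


# Illustrative parameters chosen so the horizon lands at 137 minutes (2h17m).
alpha, beta = math.log2(137), -1.0
print(round(horizon_50(alpha, beta)))                   # 137
print(round(success_probability(137, alpha, beta), 3))  # 0.5
```

A shallower slope (`beta` closer to zero) means success falls off more gradually as tasks get longer. A single horizon number hides that detail.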

What Weco shows is that the real leverage isn’t in squeezing more IQ points out of the model; it’s in building the right harness around it. Give GPT-5 an eval, a loop, and a search process, and it can do far better on long-range tasks.

The Shift

Evals are the top-level abstraction in the AI stack.

LLMs help us generate.

Agents help us automate.

Evals help us direct.

They’re the connective tissue between human intention and machine action, the way you encode what you actually want.

The companies that figure out how to operationalize evals, how to turn fuzzy goals into measurable ones, will compound faster than everyone else. Because once you can measure it, you can automate improvement against it. And that loop, running 24/7 with human tuning and supervision, is a moat.